To watch this as a 5-minute video

To listen to it as a 5-minute podcast

I am an absolute beginner in AI. I enjoy approaching Generative AI by finding out what AI can NOT do.

Driven by curiosity and a thirst for learning, and equipped with a questioning lens, I attended the three-day GenAI Summit 2024 in San Francisco, May 29-31, 2024.

My head spun for three days straight. I nearly drowned from drinking too much AI water from the firehose. After filling half a notebook and snapping almost a thousand photos of slides from a dozen speakers, I am sharing with you what interested me the most (and it is not technically focused).

Toward the end of this article, I have included a glossary, “AI Jargon for ‘Non-Nerds’ and AI Newbies”, to help those who are not data scientists or software engineers.

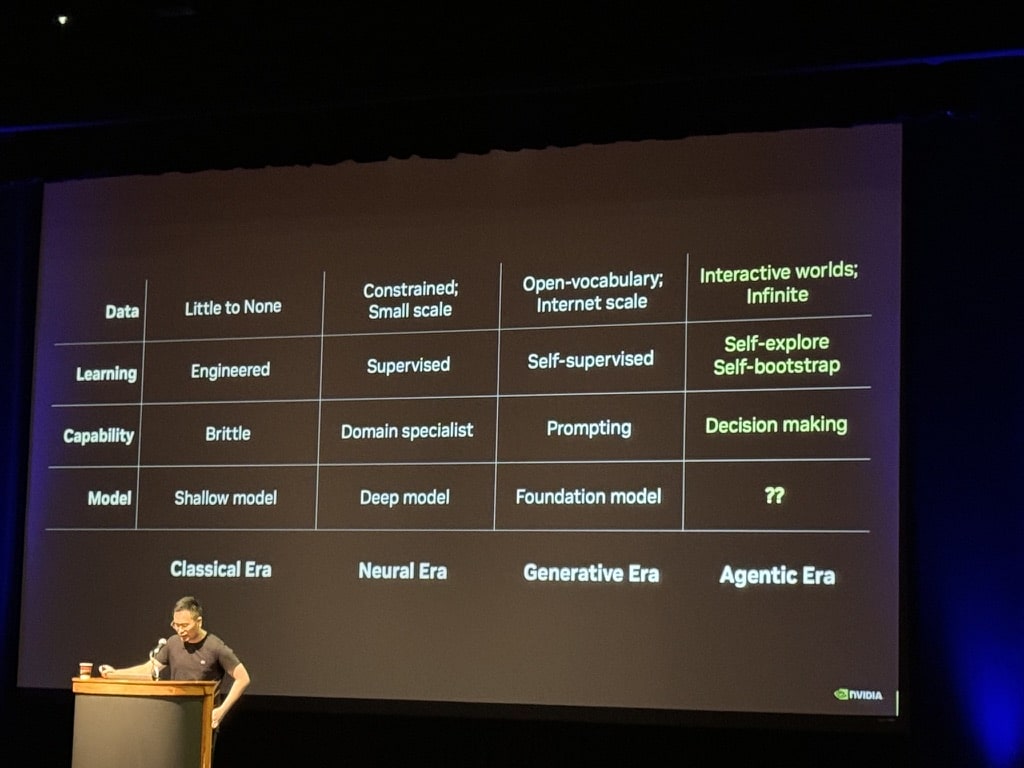

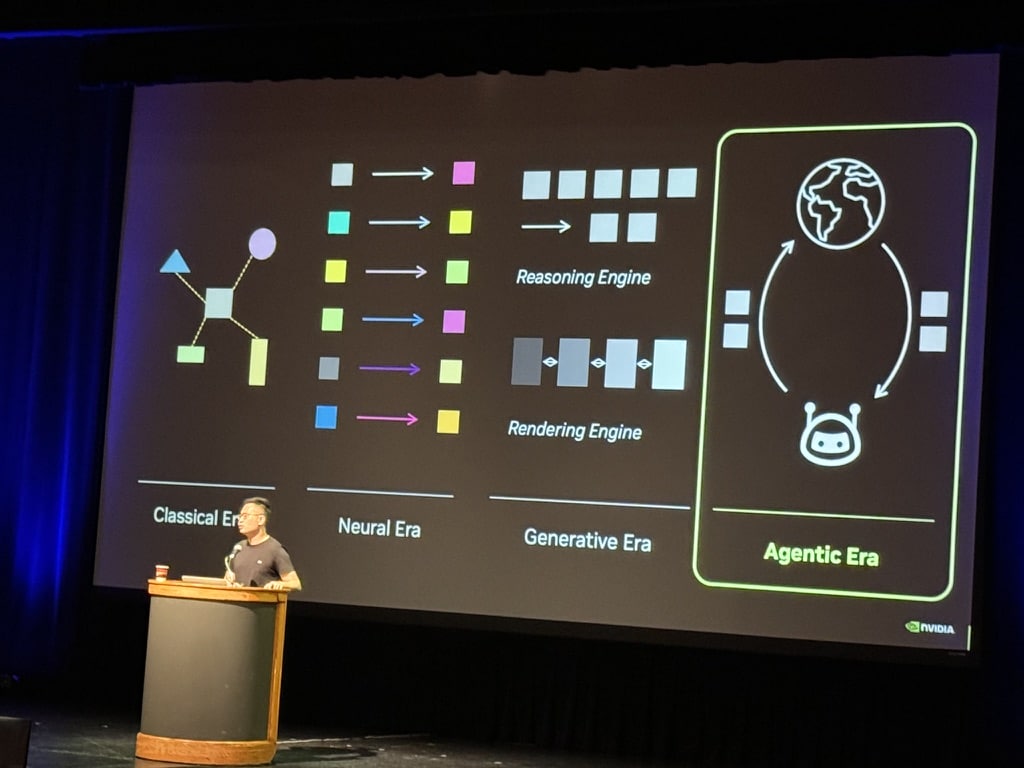

“Zero to trillion: since the AI robotic humanoid is still being created, today it is zero, but once it is developed, it will be a trillion-dollar business.” – Dr. Jim Fan, Senior Research Scientist at NVIDIA and leader of the AI Agents Initiative, quoting Jensen Huang, CEO of NVIDIA.

“Bill Gates says AI could kill Google Search and Amazon as we know them”, CNBC, May 22, 2023.

“AI is changing so fast, it is like climbing a mountain, and the mountain is moving!” “There will be a product-led growth, like a viral effect … It is the AI network effect: value goes up for everyone!” – Jeremiah Owyang, Blitzscaling Ventures General Partner.

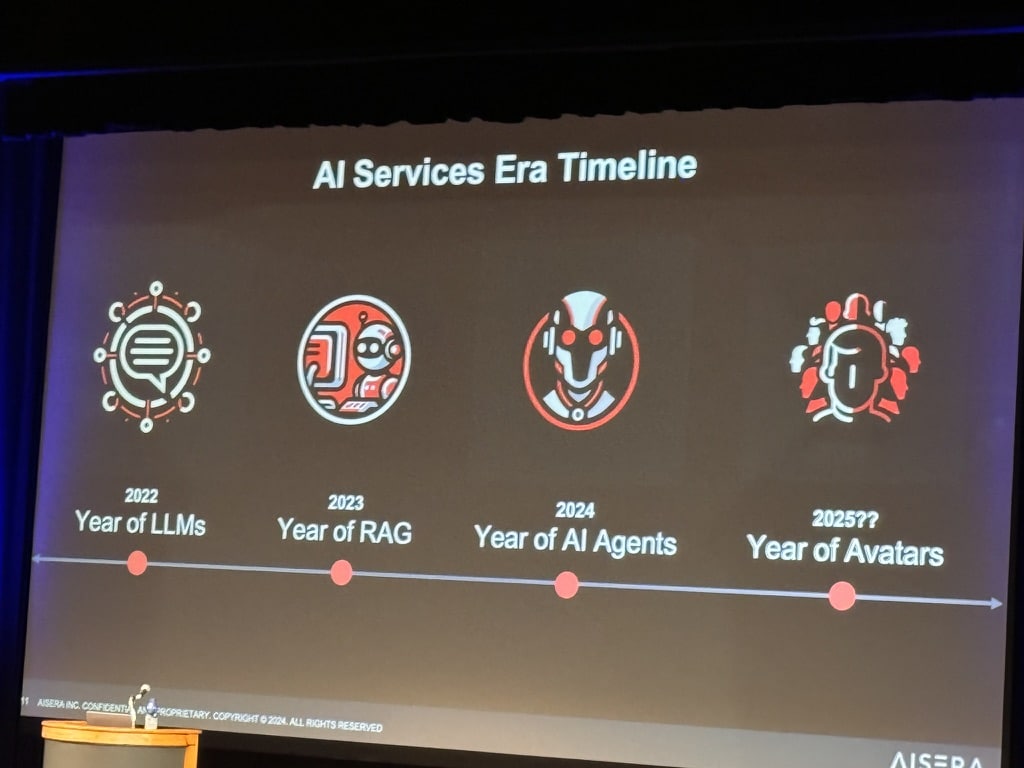

“In 2 months, massive disruption will be happening!” “Just pay attention to next week’s news! (not about Donald Trump, but about AI.)” – Dr. Muddu Sudhakar, Co-Founder & CEO, Aisera.

“The speed of AI growth the likes of which has never been seen. There will be a massive job creation.” – Joanne Chen, General Partner at Foundation Capital.

“SF is the AI center among all continents. In just April, 2024, there are 146+ in-person AI events in the SF Bay Area.” – Jeremiah Owyang, Blitzscaling Ventures General Partner.

Most of the experts said we are NOT getting close to AGI (artificial general intelligence) any time soon, because there are many obstacles and bottlenecks still to overcome.

A closer look at Bill Gates’ statement reveals a caveat. Although a future AI personal assistant will bring profound changes, and the “technology could radically alter user behaviors, resulting in people never needing to visit a search website again, use certain productivity tools or shop on Amazon,” Gates also said, “It will take some time until this powerful future digital agent is ready for mainstream use.”

Here is a sample of the challenges:

– LLM-based solutions take longer to build into enterprise software (they are not simple rule-based systems as before). API usage costs a lot!

– Vertical apps still need humans in the loop; they are tailored to narrow tasks and cannot do complex, multi-level reasoning.

– Enterprises adopting AI agents all face the same problems, but wage different battles: ROI, price efficiency, accuracy, funding user-based LLMs, language translation, use cases, model training, fine-tuning, evolving; brand damage and reputation risks, compliance, user loyalty, user trust …

– Today, it is harder to build software (even though it is easier to build demos or prototypes).

– It takes mega rounds of investment to build infrastructure, security, models, and data. VC investment heavily favors incumbents, the legacy companies that have both hardware and software. They have used open source, already have something to sell, and enjoy advantages in distribution, build, design, manufacturing, supply chain, and control loop. There will be lots of carnage among smaller and more recent startups.

– A “Forget” button needs to be developed, so that models can UNlearn data.

(To be continued in Pt. 2 of 2.)

To read Pt. 2 as a 5-minute blog

To watch Pt. 2 as a 6-minute video

To hear Pt. 2 as a 6-minute podcast

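AI Jargon for “Non-Nerds” and AI Newbies

RAG: Retrieval-augmented generation, a technique for improving the accuracy and reliability of generative AI models with facts fetched from external sources: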

https://blogs.nvidia.com/blog/what-is-retrieval-augmented-generation/
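To make the “retrieve, then generate” idea concrete, here is a minimal sketch in Python. It assumes a hypothetical three-sentence knowledge base and ranks documents by simple word overlap, a stand-in for the embedding search a real RAG system would use; the final step of sending the prompt to an LLM is deliberately left out.

```python
import re
from collections import Counter

# Hypothetical mini knowledge base standing in for an external document store.
DOCUMENTS = [
    "The GenAI Summit 2024 took place in San Francisco from May 29 to 31, 2024.",
    "SRAM is fast on-chip memory that is popular in AI chips.",
    "A vector database stores pieces of information as vectors.",
]

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question."""
    q = Counter(tokenize(question))

    def score(doc):
        return sum((q & Counter(tokenize(doc))).values())

    return sorted(documents, key=score, reverse=True)[:k]

def build_prompt(question, documents, k=1):
    """Augment the question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

print(build_prompt("Where did the GenAI Summit 2024 take place?", DOCUMENTS))
```

The retrieved sentence is pasted into the prompt, so the model answers from supplied facts instead of relying only on what it memorized during training.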

cRAG: Causal grounding in Causal AI.

LAMs: Large Action Models. A hot topic and development in the realm of artificial intelligence (AI) is Large Action Models, also referred to as Large Agentic Models or LAMs: https://medium.com/version-1/the-rise-of-large-action-models-lams-how-ai-can-understand-and-execute-human-intentions-f59c8e78bc09

API: “Application Programming Interface”. In the context of APIs, the word Application refers to any software with a distinct function. Interface can be thought of as a contract of service between two applications. This contract defines how the two communicate with each other using requests and responses.
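The “contract” idea can be sketched in a few lines of Python. The word-count service, its `text` and `word_count` fields, and the status codes below are all hypothetical; the point is that the client only needs to know the request and response format, not how the service works inside. Real web APIs usually exchange JSON like this over HTTP.

```python
import json

# Hypothetical contract: a request must carry a "text" string; a response always
# carries a "status" plus either "word_count" (success) or "error" (failure).
def word_count_service(request_body: str) -> str:
    """Server side: parse the JSON request, do the work, return a JSON response."""
    try:
        request = json.loads(request_body)
        text = request["text"]
        if not isinstance(text, str):
            raise TypeError("'text' must be a string")
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return json.dumps({"status": 400, "error": str(exc)})
    return json.dumps({"status": 200, "word_count": len(text.split())})

def client(text: str) -> int:
    """Client side: builds a request that follows the contract and reads the response."""
    response = json.loads(word_count_service(json.dumps({"text": text})))
    if response["status"] != 200:
        raise RuntimeError(response["error"])
    return response["word_count"]

print(client("AI is changing so fast"))  # 5
```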

SLM: Small Language Model, a machine learning model in artificial intelligence (AI) that is typically based on a large language model (LLM) but is much smaller in size. SLMs are often trained on domain-specific data and can be used for a variety of tasks, including:

– Data engineering: SLMs can help with complex data engineering tasks and specialized problems.

– User prompts: SLMs can enrich user prompts and make responses more accurate.

– Conversational AI: SLMs can provide quick and relevant responses in real time, even when operating within the resource constraints of mobile devices or embedded systems.

Small Language Models (SLMs) are compact versions of language models that offer a more efficient and versatile approach to natural language processing. These nimble models handle data processing efficiently, especially in scenarios where large language models (LLMs) would be overkill.

Grounding: Grounding in AI is the process of connecting abstract knowledge in AI systems to real-world examples, such as by providing models with access to specific data sources. This process can help AI systems produce more accurate, relevant, and contextually appropriate outputs.

RDF: The Resource Description Framework (RDF) is a World Wide Web Consortium (W3C) standard originally designed as a data model for metadata. It has come to be used as a general method for description and exchange of graph data. RDF is a standard model for data interchange on the Web. RDF has features that facilitate data merging even if the underlying schemas differ, and it specifically supports the evolution of schemas over time without requiring all the data consumers to be changed.

(Wikipedia:) RDF provides a variety of syntax notations and data serialization formats, with Turtle (Terse RDF Triple Language) currently being the most widely used notation.

RDF is a directed graph composed of triple statements. An RDF graph statement is represented by: 1) a node for the subject, 2) an arc that goes from a subject to an object for the predicate, and 3) a node for the object. Each of the three parts of the statement can be identified by a Uniform Resource Identifier (URI). An object can also be a literal value. This simple, flexible data model has a lot of expressive power to represent complex situations, relationships, and other things of interest, while also being appropriately abstract.
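As a rough illustration of the triple model described above, here is a dependency-free Python sketch. The `example.org` namespace and the facts are hypothetical, and treating any string that starts with `http` as a URI is a simplifying assumption; libraries such as rdflib provide proper URI and literal types and Turtle serialization. The output uses N-Triples, a simple line-based W3C format with one triple per line.

```python
# Each statement is a (subject, predicate, object) triple.
EX = "http://example.org/"  # hypothetical namespace for illustration

triples = [
    (EX + "GenAISummit2024", EX + "locatedIn", EX + "SanFrancisco"),
    (EX + "GenAISummit2024", EX + "startDate", "2024-05-29"),  # literal object
    (EX + "SanFrancisco", EX + "name", "San Francisco"),        # literal object
]

def term(value: str) -> str:
    """Format a term for N-Triples: URIs in angle brackets, literals in quotes."""
    if value.startswith("http"):  # simplifying assumption: URIs start with http
        return f"<{value}>"
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'

def to_ntriples(graph):
    """Serialize every triple as 'subject predicate object .' on its own line."""
    return "\n".join(f"{term(s)} {term(p)} {term(o)} ." for s, p, o in graph)

def objects(graph, subject, predicate):
    """Follow an arc: every object reachable from `subject` via `predicate`."""
    return [o for s, p, o in graph if s == subject and p == predicate]

print(to_ntriples(triples))
print(objects(triples, EX + "GenAISummit2024", EX + "locatedIn"))
```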

SRAM: Static Random-Access Memory (SRAM) is a popular choice for Artificial Intelligence (AI) chips because it’s fast, reliable, and locally available within the chip, providing instant access to data. SRAM is also compatible with CMOS logic processes, which allows it to track improvements in logic performance as technology advances. However, SRAM can be expensive and consume a lot of energy and space.

SRAM is recommended for small memory blocks and high-speed requirements, but it comes with drawbacks such as its large area and higher power consumption. For projects with significant memory requirements, ReRAM (resistive random-access memory) offers an advantage owing to its density.

SRAM is made up of flip-flops, which are bistable circuits made up of four to six transistors. Once a flip-flop stores a bit, it keeps that value (as long as power is supplied) until it is overwritten with the opposite value. SRAM comes in two varieties: synchronous and asynchronous. Synchronous SRAMs are synchronized with an external clock signal and only read and write information at certain clock states, while asynchronous SRAMs respond to their control signals without waiting for a clock.
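The “bistable” behavior described above can be sketched at the logic level in Python. This models an SR latch built from two cross-coupled NOR gates, a simplified textbook stand-in: a real six-transistor SRAM cell uses two cross-coupled inverters plus access transistors, which this sketch does not model. It shows how a bit is set, held, and overwritten.

```python
def nor(a: int, b: int) -> int:
    """A NOR gate: outputs 1 only when both inputs are 0."""
    return int(not (a or b))

class SRLatch:
    """Logic-level model of a bistable cell made of two cross-coupled NOR gates."""

    def __init__(self):
        self.q, self.q_bar = 0, 1  # start in the stable "stores 0" state

    def apply(self, s: int, r: int) -> int:
        """Apply Set/Reset inputs, let the feedback loop settle, return the stored bit."""
        if s and r:
            raise ValueError("S=1 and R=1 together is not allowed for an SR latch")
        for _ in range(10):  # a few passes are enough for the loop to settle
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break  # stable: nothing changes any more
            self.q, self.q_bar = q, q_bar
        return self.q

latch = SRLatch()
print(latch.apply(1, 0))  # set: 1
print(latch.apply(0, 0))  # hold: still 1
print(latch.apply(0, 1))  # reset (overwrite): 0
print(latch.apply(0, 0))  # hold: still 0
```

With both inputs at 0, the feedback loop simply keeps whatever value it already holds, which is the property that makes this kind of circuit usable as memory.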

SRAM is a locally available memory within a chip. Therefore, it offers instantly accessible data, which is why it is favored in AI applications.

https://semiengineering.com/sram-in-ai-the-future-of-memory/

MLM: Machine Learning Morphism, a building block for designing machine learning workflows. MLMs have these components: an input space X, which is modeled as a probability space and has training realizations X; and an output space Y, which models the outcome of interest.

Knowledge graph: (Wikipedia:) A knowledge graph formally represents semantics by describing entities and their relationships. Knowledge graphs may make use of ontologies as a schema layer. By doing this, they allow logical inference for retrieving implicit knowledge rather than only allowing queries requesting explicit knowledge.
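To show what “retrieving implicit knowledge” through an ontology can mean, here is a small Python sketch. The entity `AcmeAI` and the class names are hypothetical, and the only inference rule implemented is the transitive subclass rule (if X is an A, and every A is a B, then X is a B); real knowledge-graph reasoners support many more rules.

```python
# Explicit facts and a tiny ontology (the schema layer), both stored as triples.
facts = {
    ("AcmeAI", "isA", "AIStartup"),  # hypothetical entity
}
ontology = {
    ("AIStartup", "subClassOf", "Startup"),
    ("Startup", "subClassOf", "Company"),
}

def infer_types(facts, ontology):
    """Derive implicit isA facts by following subClassOf links until nothing new appears."""
    inferred = set(facts)
    changed = True
    while changed:
        changed = False
        for entity, rel, cls in list(inferred):
            if rel != "isA":
                continue
            for sub, rel2, sup in ontology:
                if rel2 == "subClassOf" and sub == cls and (entity, "isA", sup) not in inferred:
                    inferred.add((entity, "isA", sup))
                    changed = True
    return inferred

all_facts = infer_types(facts, ontology)
print(("AcmeAI", "isA", "Company") in facts)      # False: not stated explicitly
print(("AcmeAI", "isA", "Company") in all_facts)  # True: inferred via the ontology
```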

Vector database: A vector database stores pieces of information as vectors. Vector databases cluster related items together, enabling similarity searches and the construction of powerful AI models. https://www.cloudflare.com/learning/ai/what-is-vector-database/
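The core operation, similarity search, can be sketched in Python with made-up 3-dimensional vectors. Real systems store model-generated embeddings with hundreds of dimensions and use approximate nearest-neighbor indexes (some of which work by clustering vectors) instead of the brute-force scan below; the words and numbers here are purely illustrative.

```python
import math

# Hypothetical 3-dimensional "embeddings"; similar meanings get nearby vectors.
store = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.1, 0.9, 0.2],
    "truck": [0.05, 0.95, 0.3],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def nearest(query, store, k=2):
    """Brute-force similarity search: rank every stored vector against the query."""
    return sorted(store, key=lambda key: cosine(query, store[key]), reverse=True)[:k]

print(nearest([0.88, 0.12, 0.02], store))  # ['cat', 'kitten']
```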

Wrapper: In the context of AI, a “wrapper” typically refers to a piece of software or code that encapsulates or wraps around another piece of code or a system, providing a simplified or standardized interface for interacting with it.

For example, in machine learning, a wrapper might be used to encapsulate a complex algorithm or model, allowing it to be easily integrated into a larger software system without the need for the developers to understand all the details of how the algorithm works.

In other contexts, a wrapper might be used to provide additional functionality or features around an existing system, such as logging, error handling, or security.

Overall, wrappers are often used to simplify the process of integrating different components or systems within a larger software application or framework.
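Here is a minimal Python sketch of the wrapper idea. The `legacy_score` function is a hypothetical “model” with an awkward interface (a dict with a `txt` key in, a raw number out); the wrapper hides that behind a simple `predict(text)` call and adds the logging and error handling mentioned above.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("wrapper-demo")

def legacy_score(payload: dict) -> float:
    """Hypothetical underlying model: expects {'txt': ...} and returns a raw score."""
    words = payload["txt"].lower().split()
    positive = sum(word in ("good", "great", "love") for word in words)
    negative = sum(word in ("bad", "awful", "hate") for word in words)
    return float(positive - negative)

class SentimentWrapper:
    """Wraps a scoring model behind predict(text) -> label, with logging and error handling."""

    def __init__(self, model=legacy_score):
        self._model = model

    def predict(self, text: str) -> str:
        try:
            score = self._model({"txt": text})  # adapt to the model's own interface
        except Exception:
            logger.exception("model call failed")
            return "unknown"
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        logger.info("predict(%r) -> %s", text, label)
        return label

wrapper = SentimentWrapper()
print(wrapper.predict("I love this great summit"))  # positive
```

Callers of `predict` never see the `txt` key or the raw score, so the underlying model could be swapped without changing their code.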

MOAT: In business and investing, including AI startups, “moat” is usually not an acronym but a metaphor, popularized by Warren Buffett as the “economic moat”: a durable competitive advantage that protects a company from competitors, just as a physical moat surrounding a castle or fortress defends it from attackers. A related defensive idea within machine learning itself is adversarial training, a technique used to improve the robustness and resilience of models against adversarial attacks.

Just as a moat defends a castle, adversarial training helps defend machine learning models from adversarial attacks by training them on adversarially perturbed examples.

The goal of adversarial training is to expose the model to small, carefully crafted perturbations to input data during the training process. This helps the model learn to make more robust predictions, even when faced with adversarial examples designed to fool it.
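A toy Python sketch of adversarial training follows. It uses the Fast Gradient Sign Method (FGSM) to craft the small perturbations and a two-feature logistic regression model as the “machine learning model”; the six data points, the perturbation size `eps`, the learning rate, and the epoch count are arbitrary illustrative choices, not values from any real system.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability that x belongs to class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """FGSM: nudge each feature by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(cross-entropy loss)/dx for this model
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]

def sgd_step(w, b, x, y, lr):
    """One stochastic gradient descent step on the cross-entropy loss."""
    err = predict_proba(w, b, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err

def adversarial_train(data, eps=0.5, lr=0.1, epochs=200):
    """Train on each clean example and on its FGSM-perturbed counterpart."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # crafted against the current model
            w, b = sgd_step(w, b, x, y, lr)
            w, b = sgd_step(w, b, x_adv, y, lr)
    return w, b

# Hypothetical toy data: class 1 in the upper right, class 0 in the lower left.
DATA = [([2.0, 2.0], 1), ([3.0, 1.0], 1), ([2.5, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -1.0], 0), ([-2.5, -3.0], 0)]

w, b = adversarial_train(DATA)
for x, y in DATA:
    x_adv = fgsm(w, b, x, y, 0.5)
    print(x, y, predict_proba(w, b, x) > 0.5, predict_proba(w, b, x_adv) > 0.5)
```

Because the perturbed copies are generated against the model as it trains, the model learns a decision boundary that still holds when inputs are pushed by up to `eps` in the worst direction.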

RPA: Robotic Process Automation (RPA) is a software technology designed to simplify the creation, deployment, and management of software bots that mimic human actions and interactions with digital systems and software.

OCR: “Optical Character Recognition.” It is a technology that recognizes text within a digital image. It is commonly used to recognize text in scanned documents and images. OCR software can convert a physical paper document or an image into an accessible electronic version with text.
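To give a feel for how software can “recognize text within an image”, here is a toy Python sketch of template matching, one of the oldest OCR ideas. The 3x5 bitmap “font” is hypothetical, and modern OCR engines (for example, Tesseract) rely on trained neural networks rather than this simple pixel comparison.

```python
# Hypothetical 3x5 bitmap "font": '#' is an ink pixel, '.' is background.
TEMPLATES = {
    "0": ["###", "#.#", "#.#", "#.#", "###"],
    "1": [".#.", "##.", ".#.", ".#.", "###"],
    "7": ["###", "..#", ".#.", ".#.", ".#."],
}

def mismatches(a, b):
    """Count pixels that differ between two same-sized bitmaps."""
    return sum(pa != pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))

def recognize_glyph(bitmap):
    """Return the template character whose pixels best match the bitmap."""
    return min(TEMPLATES, key=lambda ch: mismatches(TEMPLATES[ch], bitmap))

def recognize_line(rows, width=3, gap=1):
    """Split a line of glyphs separated by `gap` blank columns and recognize each one."""
    text = []
    for start in range(0, len(rows[0]), width + gap):
        glyph = [row[start:start + width] for row in rows]
        text.append(recognize_glyph(glyph))
    return "".join(text)

# Build an "image" of the text 701 with one blank column between characters.
rows = [".".join(TEMPLATES[ch][i] for ch in "701") for i in range(5)]
print(recognize_line(rows))  # 701

noisy_seven = ["###", "..#", ".#.", "##.", ".#."]  # one stray ink pixel
print(recognize_glyph(noisy_seven))  # 7
```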

(Feel free to add more.)

© Joanne Z. Tan All rights reserved.

====================================================================================

– To stay in the loop, subscribe to our Newsletter

– Download free Ebook

Please don’t forget to like it, comment on it, or, better yet, SHARE IT WITH OTHERS; they will be grateful!

(About 10 Plus Brand: the “whole 10 yards” of brand building, digital marketing, and content creation for business and personal brands. To contact us: 1-888-288-4533.)
